👕 WTSMerch now available

🎟️ WTSFest 2026 tickets on sale for London, Portland, & Philadelphia

Author: Emina Demiri-Watson

Last updated: 03/07/2024

May was eventful for SEOs in many ways! Google launched AI Overviews, and of course there was the infamous Google API leak.

Rand Fishkin received an email on May 5th from an anonymous source claiming to have access to a massive leak of API documentation from inside Google’s Search division. Rand meticulously examined the documents, cross-referencing them with known facts and consulting with SEO experts and former Google employees.

Mic King provided further analysis by digging deeper into the leaked documentation and shedding more light on the potential implications for search engine optimisation. Since then, we’ve had confirmation (of a kind) from a Google spokesman, who emphasised that the documentation “lacks context”. The original anonymous source has also revealed himself to be Erfan Azimi.

The usual caveats apply: the leaks do not tell us what factors Google is using to rank web pages. But that doesn't mean we shouldn’t be digging deep into the documentation and seeing what we can learn from it.

If you haven’t done so already, I’d recommend checking out Dr Marie Haynes’ series of articles about the leak. She starts off by explaining what the documents which have been leaked actually are:

“These documents are not code that is used in Google’s systems. They are documents to help developers who are building with Google's AI on the Cloud Platform…. These aren’t ranking algorithms. Yet, we may be able to learn about ranking by studying them.”

I tend to agree. Just because it’s not an actual ranking document doesn’t mean it isn’t useful.

This is not an article about ‘the truth’ or one that claims to know the secrets of the algorithm.

Instead, I’ll be digging deeper into what this documentation might teach us about how to optimise video content. After all, YouTube is the second-most visited site on the internet and it’s forecasted to grow by 24.9% (232.5 million users) between 2024 and 2029. At the same time, Google’s SERPs have become increasingly diverse as well, with videos being featured 24.6% of the time, sometimes appearing above the “10 blue links”.

From a personal point of view, I also wanted to see whether the documentation could help our recently launched video series “SEOs Getting Coffee” become more visible in search.

NavBoost is Google's advanced web search system that employs click data to either boost or demote rankings. The clue is in the name.

I first heard about NavBoost in 2023 during the DOJ Antitrust Trial, where it was mentioned together with another supposed serving system called Glue. During the trial, Pandu Nayak, a VP of Search at Google, testified that NavBoost has been around since 2005. Previously it tracked a rolling 18 months of click data, which has now been reduced to 13 months of data.

With this new leak exploding, it was time to dig deeper into it. And, what a rabbit hole it turned out to be.

Unsurprisingly, NavBoost is mentioned in reference to video content in the leaked documentation – YouTube in particular has always tracked user behaviour, and the algorithm is openly based on this. Elements such as: previous videos watched; matching based on similarity of videos - e.g. what you would likely watch based on previous behaviour; and time spent watching videos on a specific topic or a channel are all part of the personal recommendation algorithm on YouTube. In fact, YouTube specifically notes that they pay attention to the users and not the video.

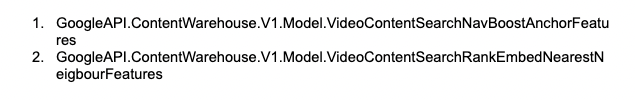

But how relevant is this when we are talking about video content in the Google SERPs? Two interesting places Navboost pops up in relation to video are both connected to anchors:

The YouTube attributes refer to three types of queries:

Let’s start with NavBoost queries. To help me understand what these might be, I used Mic King’s article on the leak.

To understand NavBoost, as Mic points out, you need to look back to the Panda update. Panda essentially creates a "modification factor" to adjust how important specific resources (like videos or web pages) are.

The Panda modification factor (M) is calculated using this formula:

M = IL / RQ

IL (independent links) is the number of different websites linking to your video, and RQ (reference queries) is the number of search queries that are related to your video.

I suspect that Reference Queries are likely what the documentation on YouTube refers to as NavBoost queries. They are search terms people have used in the past that are recognised as being related to your video.

For example, imagine you have a YouTube video titled "How to Bake a Chocolate Cake."

In this example, with 10 independent links (IL) and 50 reference queries (RQ), the system would calculate the modification factor (M) as follows:

M = 10 / 50 = 0.2

This factor helps the system understand how well-linked your video is compared to how often it is searched for. A higher modification factor would mean more independent links per reference query, potentially boosting the video's visibility in search results.
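To make the arithmetic concrete, here is a minimal Python sketch of the ratio described above. The function name `modification_factor` is my own invention for illustration, and the inputs are the example numbers from the cake video, not anything taken from Google's systems.

```python
def modification_factor(independent_links: int, reference_queries: int) -> float:
    """Return M = IL / RQ, the ratio discussed above (illustrative only)."""
    if reference_queries <= 0:
        raise ValueError("reference_queries must be a positive number")
    return independent_links / reference_queries


# Example numbers from the cake video: 10 independent links, 50 reference queries.
m = modification_factor(10, 50)
print(m)  # 0.2

# Doubling the independent links while the queries stay the same doubles M.
print(modification_factor(20, 50) > m)  # True
```

The second call shows the point made above: more independent links per reference query means a higher factor.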

If we consider that something like this is indeed part of how video search works, then NavBoost queries are the search terms and navigation patterns that help the system understand which videos are related to which search queries.

For example, if people often search for "chocolate cake recipe" and your video frequently comes up as a top result, this query would be considered a NavBoost query.

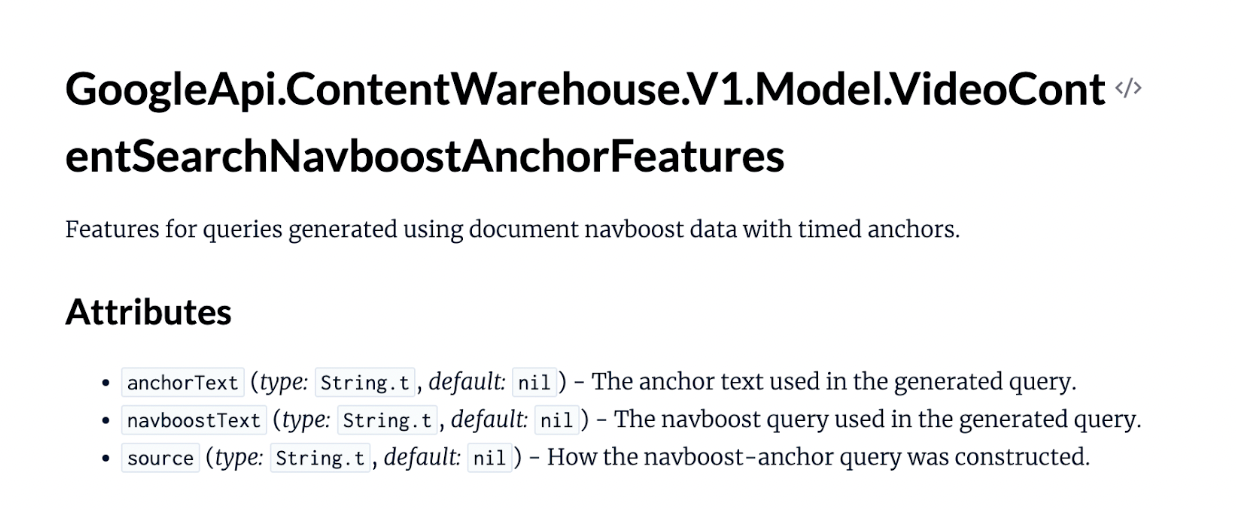

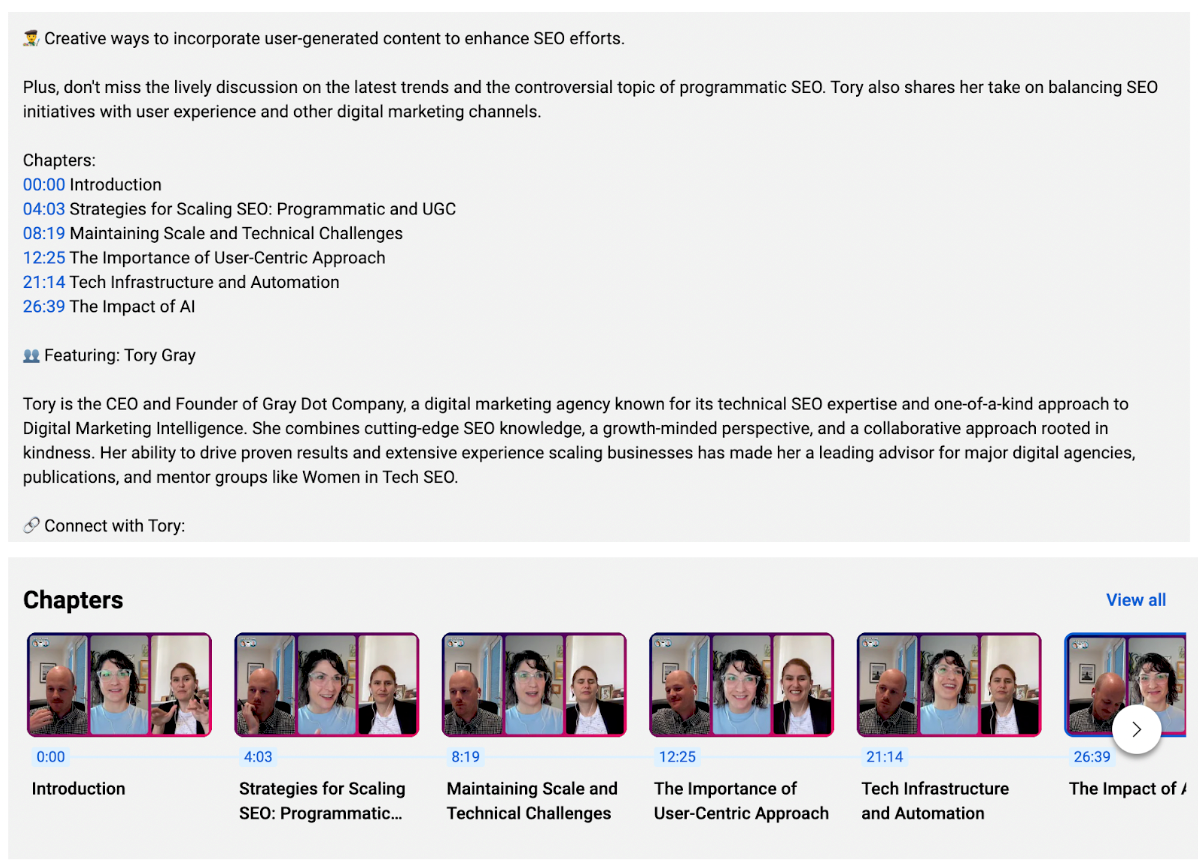

The second term I found interesting is ‘anchor queries’. I’ve never considered videos to have anchors and the only thing that comes to mind which seems plausible here are YouTube video chapters.

YouTube video chapters allow users to skip to different sections of the video:

Source: Example of chapters in our SEOs Getting Coffee podcast

Applied to our cake-baking example video, let's say it has the following chapters:

00:00 Introduction

00:45 Ingredients

02:30 Preparation

05:00 Cooking

07:45 Serving Tips

09:00 Conclusion

The user might want to skip the intro and go straight to the cooking chapter.

You’ll notice I added timestamps here. YouTube can automatically create chapters such as these or you can add these to your YouTube description manually and add the timestamps to tell YouTube how to make the sections.
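If you add chapters manually, it can help to sanity-check the timestamp list before you publish. The sketch below parses "MM:SS Title" lines from a description and flags a few common problems. The rules it checks (first timestamp at 00:00, at least three timestamps in ascending order, chapters of at least 10 seconds) reflect my understanding of YouTube's published guidance, so treat them as assumptions and check the current Help pages. The function names are hypothetical.

```python
import re

# Matches "MM:SS Title" or "H:MM:SS Title" at the start of a description line.
CHAPTER_LINE = re.compile(r"^(?:(\d{1,2}):)?(\d{1,2}):(\d{2})\s+(.+)$")

DESCRIPTION = """How to bake a chocolate cake, step by step.
00:00 Introduction
00:45 Ingredients
02:30 Preparation
05:00 Cooking
07:45 Serving Tips
09:00 Conclusion
"""


def parse_chapters(description: str) -> list[tuple[int, str]]:
    """Return (start_in_seconds, title) for every timestamped line."""
    chapters = []
    for line in description.splitlines():
        match = CHAPTER_LINE.match(line.strip())
        if match:
            hours, minutes, seconds, title = match.groups()
            start = int(hours or 0) * 3600 + int(minutes) * 60 + int(seconds)
            chapters.append((start, title.strip()))
    return chapters


def chapter_problems(chapters: list[tuple[int, str]], min_length: int = 10) -> list[str]:
    """List problems under the assumed rules described in the text above."""
    problems = []
    if len(chapters) < 3:
        problems.append("fewer than three timestamps")
    if chapters and chapters[0][0] != 0:
        problems.append("first timestamp is not 00:00")
    for (start, title), (next_start, _) in zip(chapters, chapters[1:]):
        if next_start - start < min_length:
            problems.append(f"'{title}' is out of order or shorter than {min_length}s")
    return problems


chapters = parse_chapters(DESCRIPTION)
print(chapters)
print(chapter_problems(chapters))
```

An empty problem list means the description passes these basic checks. It says nothing about whether the chapter names are well chosen.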

If we go with the logic that anchors are video chapters, then anchor queries are those that are related to your video chapters.

Now that we know the main terms, let's look at the features themselves and what YouTube content creators can do to optimise their videos.

Anchor Text

Anchor text is the chapter text (i.e. the names you give your video chapters). This is crucial because it identifies specific segments of a video that are particularly relevant to certain queries.

NavBoost Text

NavBoost Text refers to the NavBoost query text. Essentially, it's the NavBoost query (user interaction data plus common navigation patterns) that enhances the chapters. This helps surface the most relevant parts of a video based on how users typically interact with the content.

Source

This is how the NavBoost query was generated. Yes, that’s likely the murkiest part. My hunch is that this has to do with the reliability and accuracy of the NavBoost query. Possibly, the different ways a query can be generated provide deeper context, which in turn builds more or less trust.

The second interesting mention of NavBoost is related to rank embedding.

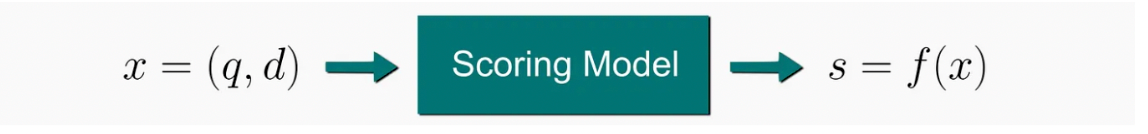

Rank embedding is a concept straight out of machine learning. The Rank part, as the name itself suggests, relates to sorting documents (in this case videos) based on a predicted relevance score to the query. That’s the simple explanation.

Behind this is of course a mathematical equation that’s not that simple.

Typically, ranking models predict a relevance score s = f(x) for each input x = (q, d) where q is a query and d is a document. Then the relevance score is what determines the sorting (the ranking). The higher the score, the better the rank.

Source: Learning to Rank: A Complete Guide to Ranking using Machine Learning
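Here is a toy illustration of that idea in Python. The scoring function below simply counts shared words, which is my own stand-in for f(x) and nothing like a production ranking model. The point is only to show the pattern: score each (query, document) pair, then sort by score.

```python
def relevance_score(query: str, document: str) -> float:
    """Toy f(x): the share of query words that also appear in the document."""
    query_words = set(query.lower().split())
    document_words = set(document.lower().split())
    if not query_words:
        return 0.0
    return len(query_words & document_words) / len(query_words)


def rank(query: str, documents: list[str]) -> list[tuple[float, str]]:
    """Score every document for the query and sort from highest to lowest."""
    scored = [(relevance_score(query, document), document) for document in documents]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


# Hypothetical video titles standing in for documents.
videos = [
    "GA4 tutorial for beginners",
    "How to bake a vanilla cake",
    "Easy chocolate cake recipe tutorial",
]

for score, title in rank("chocolate cake recipe", videos):
    print(f"{score:.2f}  {title}")
```

The higher the score, the earlier the video appears in the sorted list.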

The second part that needs explaining is the concept of Embedding. This relates to one of the approaches used to implement the scoring model, specifically the Vector Space Model. I first heard of the concept of embedding in the excellent intro to LLMs by Britney Muller.

Computers don't understand words like we do. Instead, every word is tokenized, translated into a string of numbers. These tokens are then converted into dense numerical vectors. This allows the computer to understand the relationships between the tokens (words).

Here’s a great example from Britney:

“Imagine every word or token being transformed into a multi-dimensional vector that captures its relationship with other words. This is the crux of embeddings. When we perform operations on these vectors, like the classic example of the vector representation for the word “King”: if you subtract the vector “man” from the vector for “King” and add the vector for “Woman,” it yields a strikingly similar vector for “Queen”.

Similarly, if you subtract the vector for “state” from that of “California” and add “country”, the closest vector might correspond to “America”. Pretty wild, right!? Keep in mind that these relationships aren’t always universally valid and are heavily influenced by the specific training data and algorithms used.”
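The King and Queen example can be reproduced with a few hand-made vectors. In this sketch every number is invented: real embeddings are learned from training data and have hundreds of dimensions, while these have just two (roughly "royalty" and "gender"). I'm also assuming cosine similarity as the way to find the closest vector.

```python
import math

# Hand-made 2-D "embeddings": (royalty, gender). All values are invented.
VECTORS = {
    "king": (1.0, 1.0),
    "queen": (1.0, -1.0),
    "man": (0.0, 1.0),
    "woman": (0.0, -1.0),
    "cake": (-1.0, 0.0),
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(start, subtract, add):
    """Return the word whose vector is closest to start - subtract + add."""
    target = tuple(
        s - m + p
        for s, m, p in zip(VECTORS[start], VECTORS[subtract], VECTORS[add])
    )
    # Exclude the input words so the answer is a different word.
    candidates = {
        word: vector
        for word, vector in VECTORS.items()
        if word not in (start, subtract, add)
    }
    return max(candidates, key=lambda word: cosine_similarity(target, candidates[word]))


print(analogy("king", "man", "woman"))  # queen
```

With learned embeddings the result is only "strikingly similar" rather than exact, which is why the closest vector is looked up instead of testing for equality.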

Let’s now put the two terms back together again: Rank Embedding.

RankEmbed is an approach used in machine learning that basically relates to how documents are represented in a vector space. The distance between vectors represents the similarity or relevance between the items.

In the context of this particular model from the leak, rank embedding (rankembed) similarities measure how closely related different elements (such as video anchors, NavBoost queries, and original query candidates) are to each other within the context of search and recommendation algorithms.

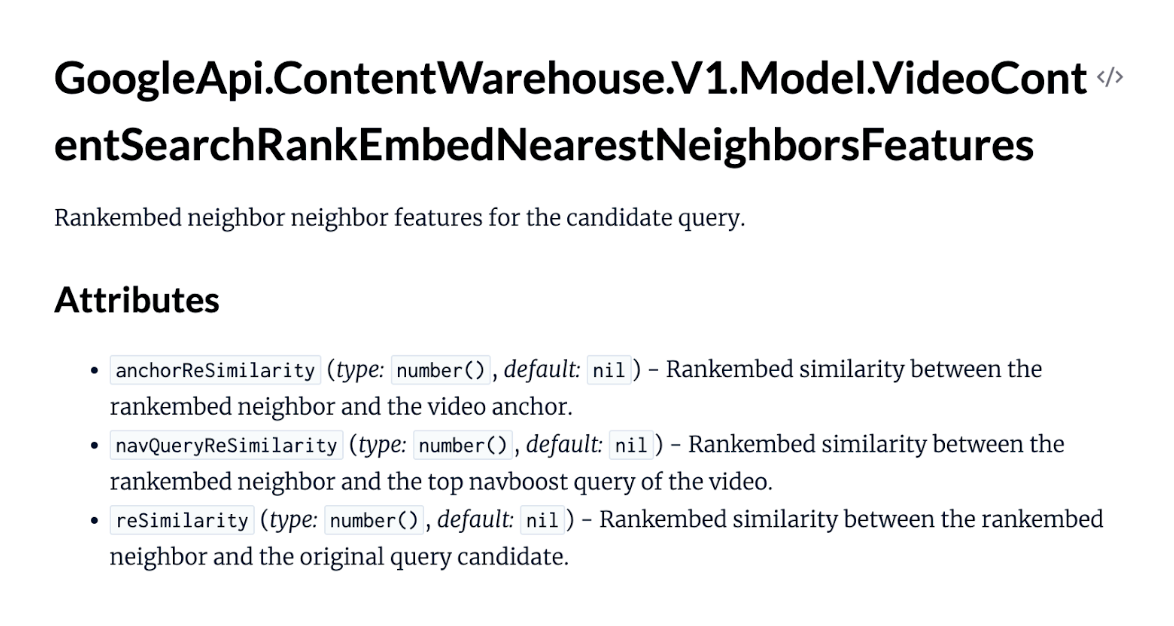

Each attribute is a clue to how this is done:

anchorReSimilarity - This attribute represents the RankEmbed similarity between the rankembed neighbour (a related or similar item in the rank embedding space) and the video anchor (specific segments or points within the video). High anchorReSimilarity means that your video chapters (anchors) closely match the rankembed neighbour, while low anchorReSimilarity means they do not.

navQueryReSimilarity - This is where the user aspects come into play. Coming back to what we said about NavBoost queries being those which are enhanced by user data, this measures the RankEmbed similarity between the RankEmbed neighbour and the top NavBoost query of the video.

reSimilarity - This represents the RankEmbed similarity between the rankembed neighbour and the original query candidate, i.e. the initial query that the user searched for.
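To picture how the three attributes relate, here is a small sketch that compares one "neighbour" vector with three other vectors. The leaked documentation does not say which similarity measure is used, so cosine similarity is my assumption, and all of the vectors are invented numbers. Only the attribute names come from the leak.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors, from -1.0 to 1.0."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Invented 3-D vectors standing in for real rank embeddings.
rankembed_neighbour = [0.9, 0.1, 0.3]
video_anchor = [0.8, 0.2, 0.4]         # e.g. a chapter title
top_navboost_query = [0.7, 0.0, 0.6]   # e.g. the video's top NavBoost query
original_query = [0.1, 0.9, 0.2]       # e.g. what the user searched for

similarities = {
    "anchorReSimilarity": cosine_similarity(rankembed_neighbour, video_anchor),
    "navQueryReSimilarity": cosine_similarity(rankembed_neighbour, top_navboost_query),
    "reSimilarity": cosine_similarity(rankembed_neighbour, original_query),
}

for name, value in similarities.items():
    print(f"{name}: {value:.3f}")
```

In this made-up case the neighbour sits close to the anchor and the NavBoost query but far from the original query, which is the kind of difference these three attributes would let a system tell apart.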

Well, we can't know exactly, but if we consider that NavBoost is part of the equation when it comes to your video chapters, then there are a few things creators can do.

Don’t rely on YouTube to automatically set your chapters. Instead, think about how to break down your video into chapters in a way that will bring you more visibility. Create clear, concise segments that include relevant keywords and phrases. Check which keywords are popular and add those to your video chapters.

Make sure those keywords are included in spoken content, on-screen text, and subtitles. Then use timestamps in video descriptions to highlight key segments, making it easier for YouTube to identify and use these optimised anchors in search results.

This one is a no-brainer. NavBoost is all about user behaviours and if your content is flat to start with it simply won't work. Create content that keeps viewers engaged. Higher engagement rates signal to YouTube that the content is valuable.

Go beyond simply thinking about an engaging topic, and guide your viewers to engage. For example, you could include compelling hooks and clear calls to action at strategic points in the video. This will not only get you visibility on YouTube, something we already know, but it should boost your videos in search as well.

After your video has been up for a while, use data to refine this engagement. YouTube Analytics lets you analyse user retention and behaviours. This will help you understand which chapters are most engaging. Those are the ones you should optimise even further.
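If you note down retention figures at a few points in the video, you can average them per chapter to compare segments. The sketch below is one way to do that. The chapter list and the retention samples are hypothetical numbers typed in by hand, not an export format that YouTube Analytics provides, and the function name is my own.

```python
from bisect import bisect_right


def average_retention_by_chapter(chapters, samples):
    """Average retention per chapter.

    chapters: list of (start_seconds, title), sorted by start time.
    samples:  list of (second, retention) where retention is 0.0 to 1.0.
    """
    starts = [start for start, _ in chapters]
    totals = {title: [0.0, 0] for _, title in chapters}
    for second, retention in samples:
        # Find the last chapter that starts at or before this second.
        index = bisect_right(starts, second) - 1
        if index < 0:
            continue  # sample falls before the first chapter
        title = chapters[index][1]
        totals[title][0] += retention
        totals[title][1] += 1
    return {
        title: total / count
        for title, (total, count) in totals.items()
        if count
    }


# Hypothetical chapters and retention samples for the cake video.
chapters = [(0, "Introduction"), (45, "Ingredients"), (150, "Preparation"), (300, "Cooking")]
samples = [(0, 1.0), (30, 0.8), (60, 0.7), (120, 0.6),
           (180, 0.5), (240, 0.5), (320, 0.65), (400, 0.6)]

for title, retention in average_retention_by_chapter(chapters, samples).items():
    print(f"{title}: {retention:.0%}")
```

Retention almost always starts high and falls, so the introduction will tend to look best on raw averages. A chapter where retention holds steady or recovers later in the video is usually the more interesting signal.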

Encourage user interaction through comments, likes, and shares. This will provide additional data for algorithms to understand and enhance the relevance of your content.

Make sure your video's metadata is comprehensive and accurate. This includes titles, descriptions, tags, and closed captions. Interacting with your audience through comments and community posts can help generate more data points for use in NavBoost queries.

Pay attention to your transcripts and subtitles. Even when using auto-creation, make sure you check the most important chapters and the keywords connected to them. You don’t want those to be misspelt.

Our initial video "How to Bake the Perfect Chocolate Cake" had the following video chapters.

Now let’s optimise it:

Those chapters are not optimised currently. We can add some keywords into them that will help the system understand the video better and how it relates to other terms in this space.

Example: Rather than simply using “Ingredients”, we can use something like “00:45 Ingredients: Best Chocolate for Baking”.

We’ve optimised the chapters in the description. Now let's look at how we can push this further with NavBoost queries.

There are 3 ways we can do this:

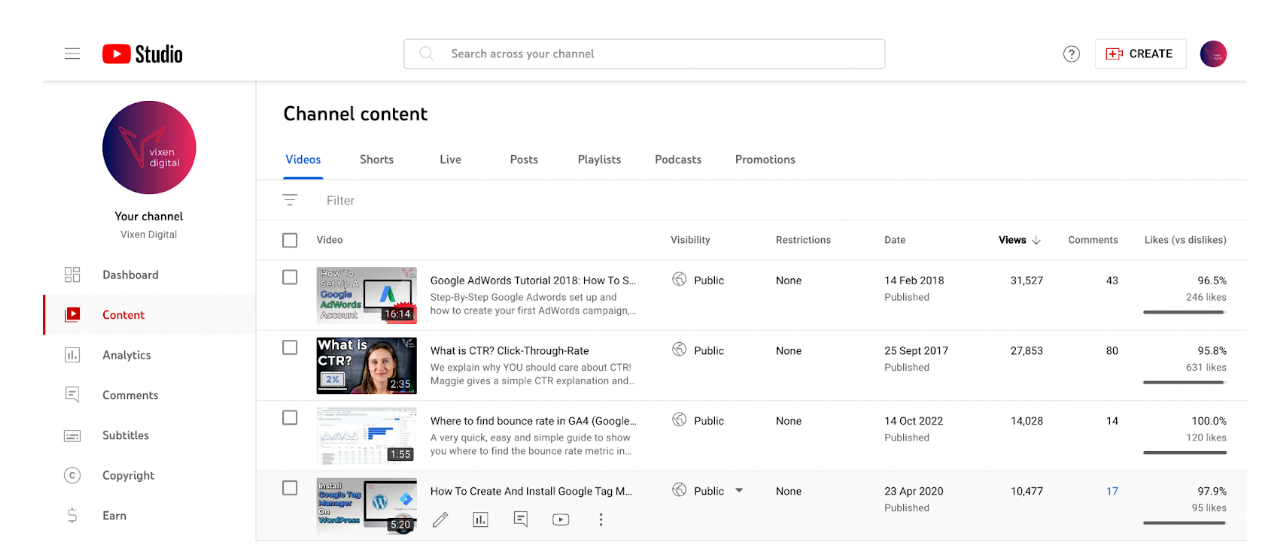

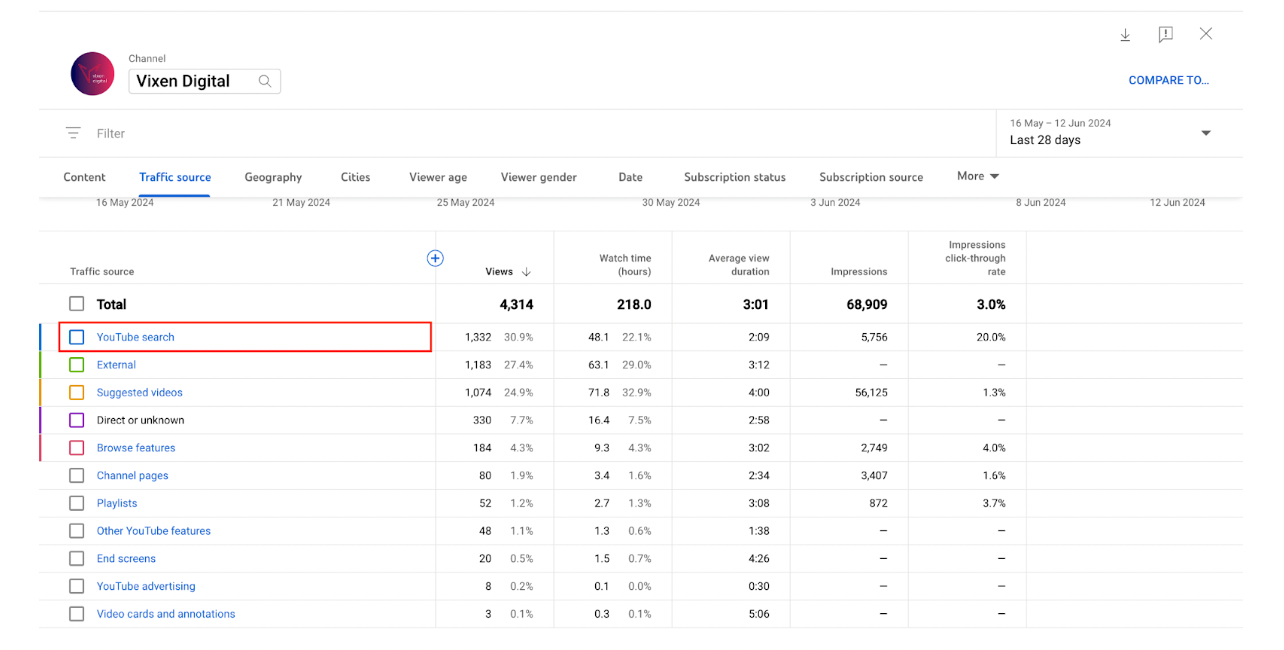

Step 1: Go to YouTube Studio

Example of the YouTube Studio dashboard (Vixen Digital)

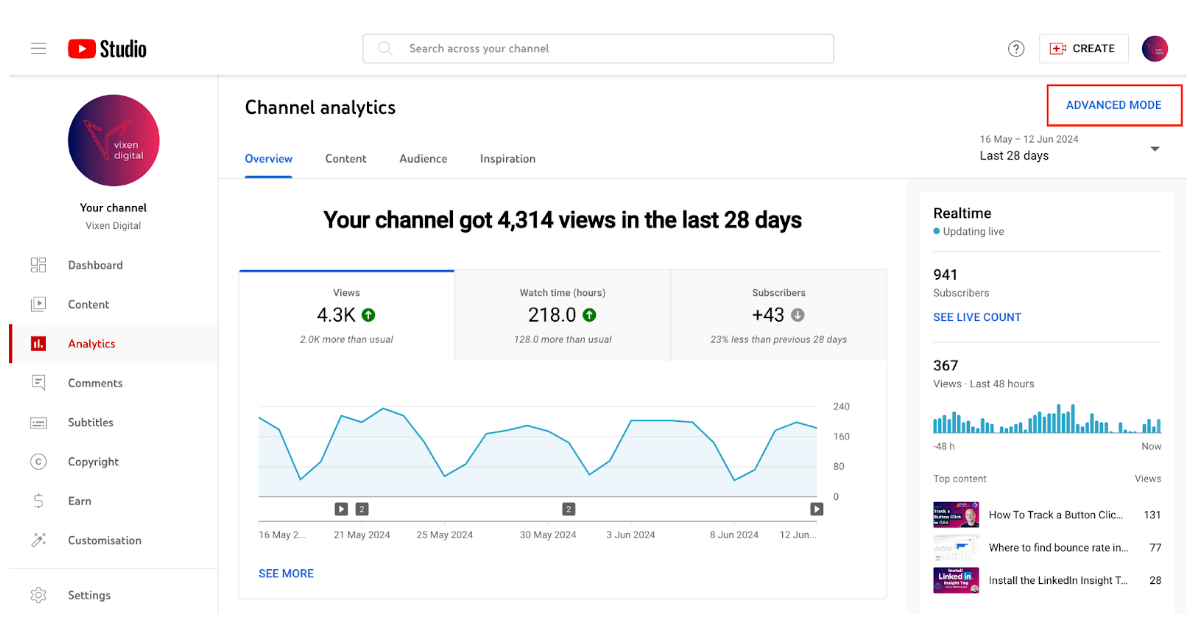

Step 2: Navigate to the Analytics section and click on Advanced Mode in the right corner

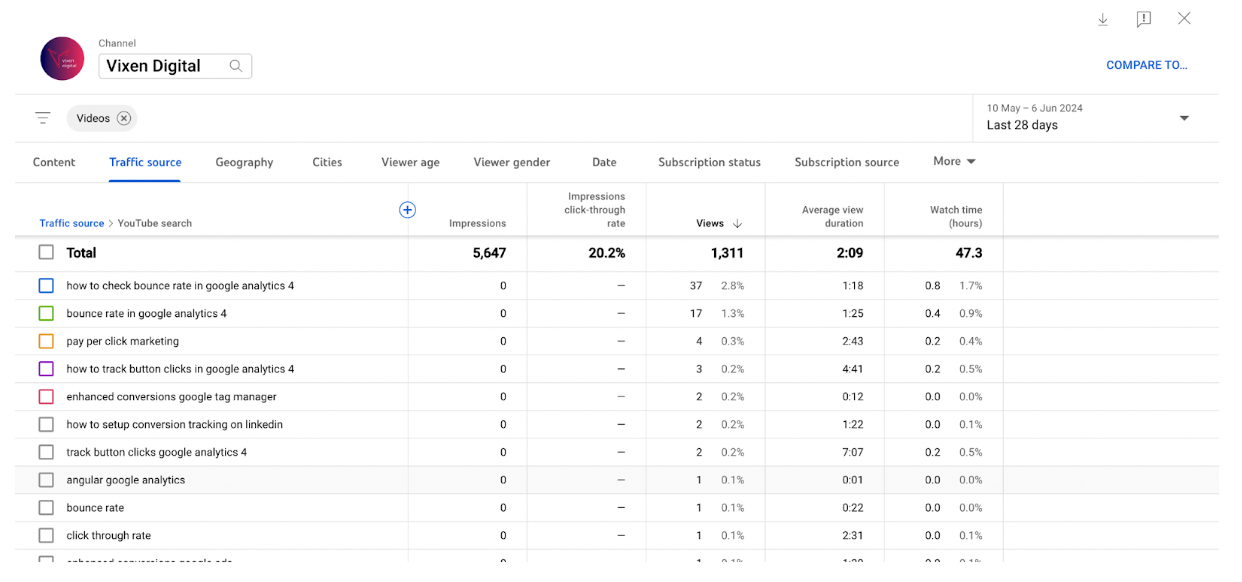

Step 3: Check the Traffic Source and choose YouTube Search. Digging into the rest of the sources can also be useful, but unfortunately Google Search, which is listed under External, won’t give you a query breakdown.

Step 4: Check the search terms that led viewers to your videos.

Example: one of our videos. This is for a GA4 tutorial. The next step for us is to review, combine with other keyword research and optimise into chapters.

Applying it to our delicious cake-baking video example, let’s say we noticed that the search "easy chocolate cake tutorial" is popular. We can now make sure one of our video chapters includes this phrase, and we can add it to the description.
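Once you have a list of search terms, a quick script can show which ones are not yet reflected in your title, chapters, or description. This is a minimal sketch: the search terms and view counts are hypothetical values typed in by hand, and the check is a simple case-insensitive phrase match, which is my own simplification.

```python
def uncovered_terms(search_terms, video_text):
    """Return (term, views) pairs whose exact phrase is missing from the text,
    most-viewed first."""
    text = video_text.lower()
    missing = [(term, views) for term, views in search_terms if term.lower() not in text]
    return sorted(missing, key=lambda pair: pair[1], reverse=True)


# Hypothetical search terms and views, as you might note them from YouTube Studio.
search_terms = [
    ("chocolate cake recipe", 95),
    ("easy chocolate cake tutorial", 120),
    ("best chocolate for baking", 40),
]

# Title, chapters, and description combined into one block of text.
video_text = """How to Bake the Perfect Chocolate Cake
00:00 Introduction
00:45 Ingredients: Best Chocolate for Baking
02:30 Preparation
05:00 Cooking
"""

for term, views in uncovered_terms(search_terms, video_text):
    print(f"{views:>4}  {term}")
```

Terms that come back from this check are candidates for a chapter name or a line in the description, provided they genuinely describe what is in the video.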

We now know that engaged users help our rankings. Let’s make sure our baking video actually engages people!

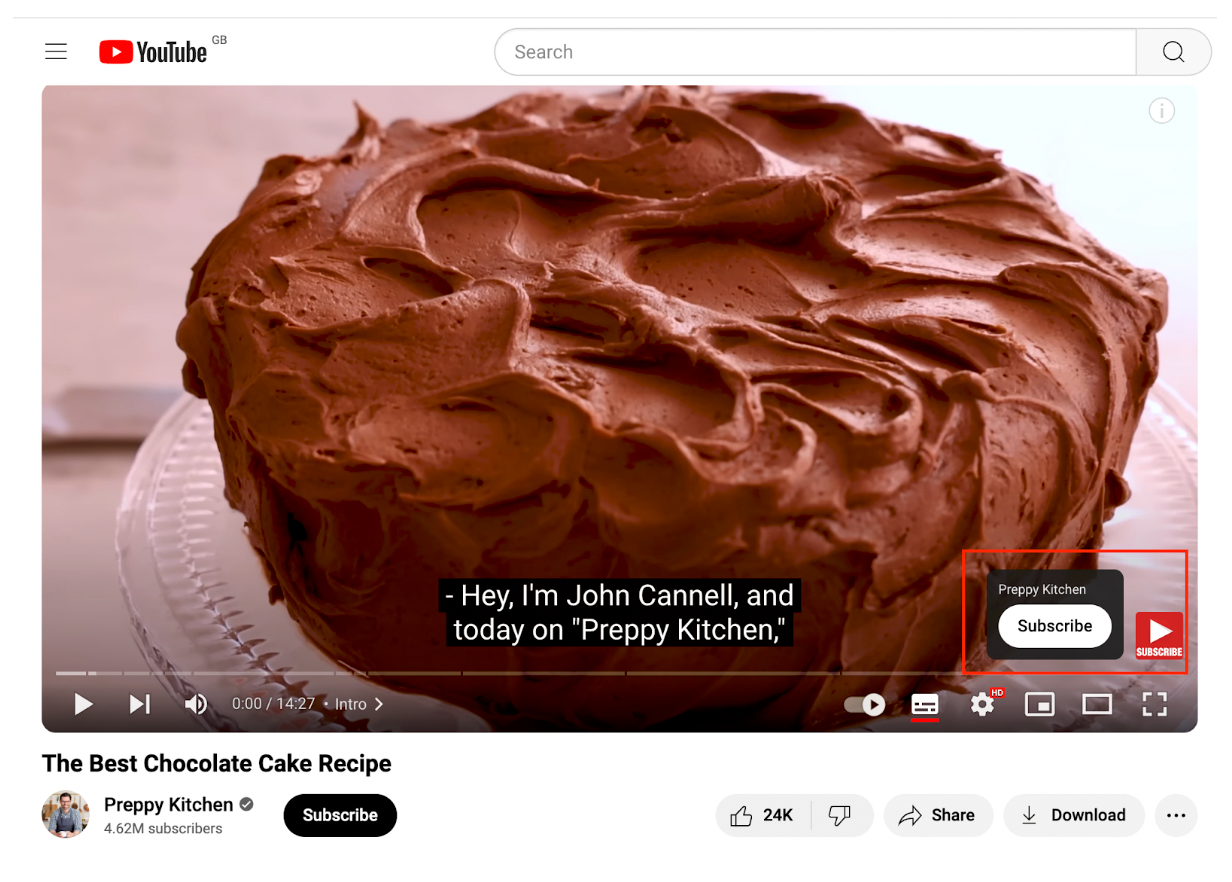

We’ll edit our video and ask viewers to comment on their favourite cooking chocolate brands during the "Ingredients" segment. Using YouTube Analytics, we know the Cooking chapter is the most engaging one for viewers. So we’ll add a subscribe CTA to it. Or better yet, let’s add that “Subscribe to all of our videos” just like Preppy Kitchen has done.

The basic elements can be tweaked as well; for example, our title could be more comprehensive: "How to Bake the Perfect Chocolate Cake - Easy Chocolate Cake Recipe Tutorial."

We could also incorporate user navigation insights into the description. For example, we might mention a phrase like "cooking chocolate cake" within the video description.

I started digging into this because I understand the importance of a video strategy in today’s marketing and the prominence of YouTube in user journeys. I also wanted more viewers to visit our SEOs Getting Coffee podcast.

Will this leak completely change the strategies we employ in YouTube or Google Video Search? No.

But there is no doubt that the leak hides a lot of ideas for optimising our video content. Useful ideas which will help our viewers better understand, engage with, and enjoy our videos.

Emina Demiri-Watson - Head of Digital Marketing, Vixen Digital

Emina has over 10 years of digital marketing experience, both in-house and agency side. She is the Head of Digital Marketing at the Brighton-based digital marketing agency Vixen Digital, and co-hosts the SEOs Getting Coffee podcast.