👕 WTSMerch now available

🎟️ WTSFest 2026 tickets on sale for London, Portland, & Philadelphia

Author: Lazarina Stoy

Last updated: 25/11/2024

The past year has further amplified the importance of diversifying traffic sources, and the need for companies to become truly omni-present when it comes to their content and brand presence. In this article, I will explore how users' search behaviors have changed (the devices they use, how and why they search for information, etc.), and look at various ways you can transform your content with the help of Machine Learning (ML) APIs and tools.

Here’s what I’ll be covering:

Why you should consider investing in content transformation

Content transformation methods and implementation

How to responsibly scale your content transformation program in the age of AI

Search is changing. Not only how we search, but where we go to find information, and what devices we use to get us there.

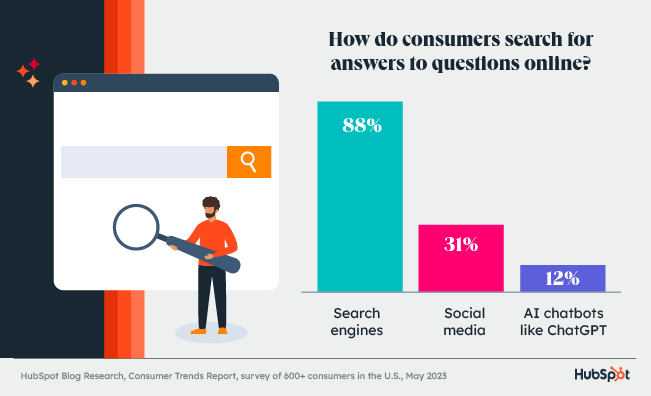

We now have more places than ever to find information: not only search engines, but social media and, most recently, AI chatbots like ChatGPT. Research by HubSpot shows that 31% of people search on social media, while 12% now use AI chatbots to find answers.

How do consumers search for answers to questions online? - HubSpot Blog Research, 2023.

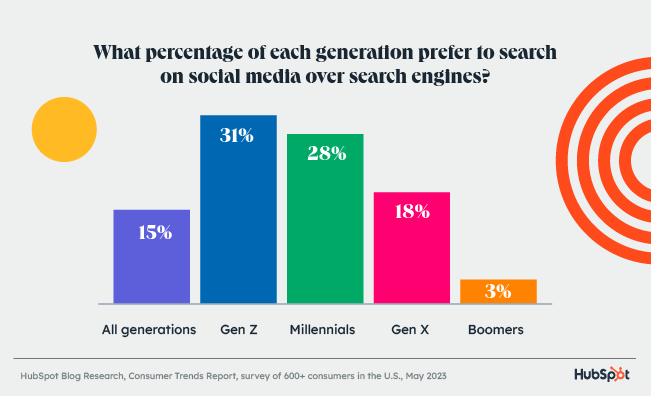

This research also shows generational differences in social media search, with nearly a third of Gen Z and Millennials (31% and 28%, respectively) preferring social media over search engines for finding information online.

What percentage of each generation prefer to search on social media over search engines? - HubSpot Blog Research, 2023.

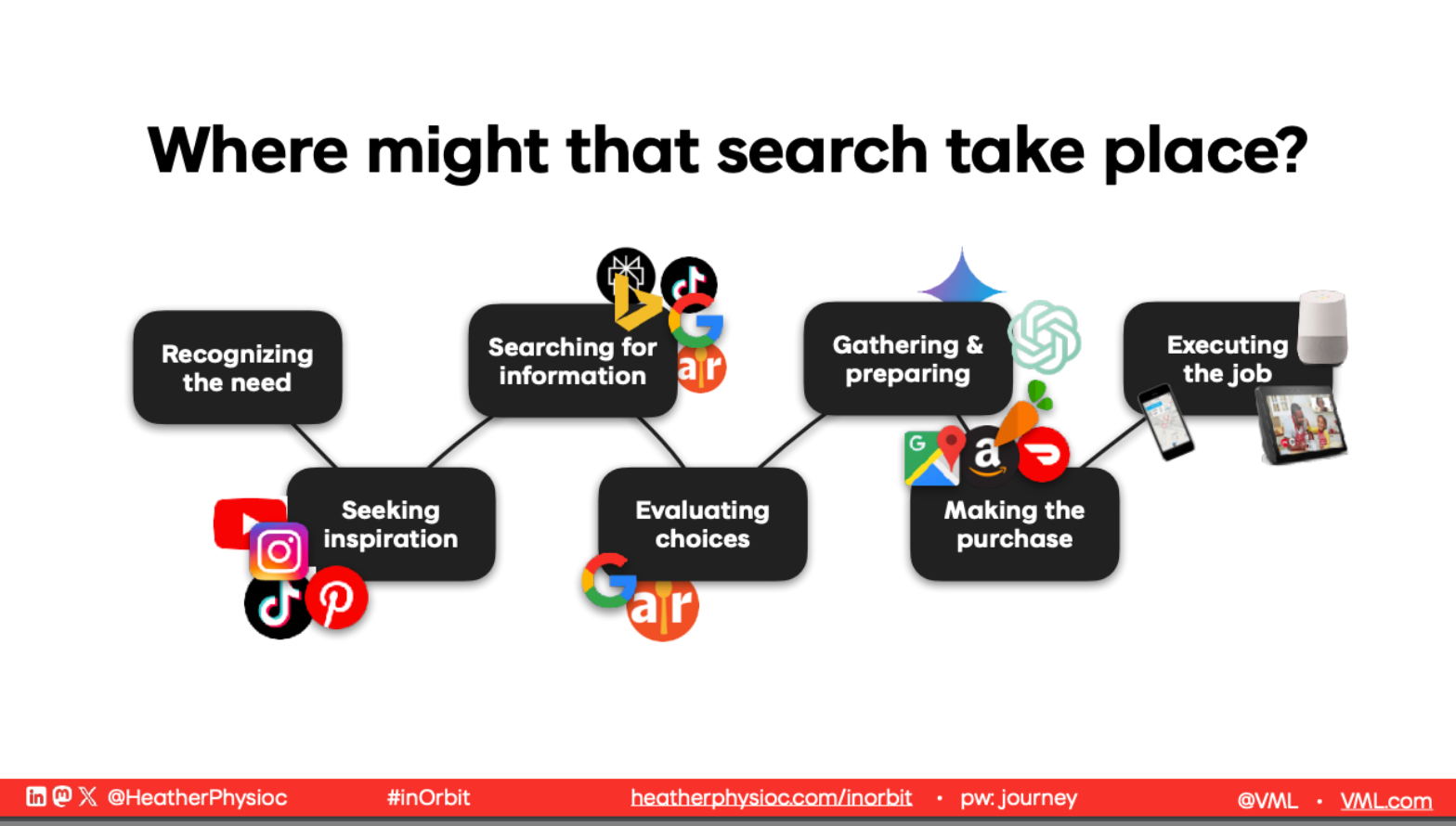

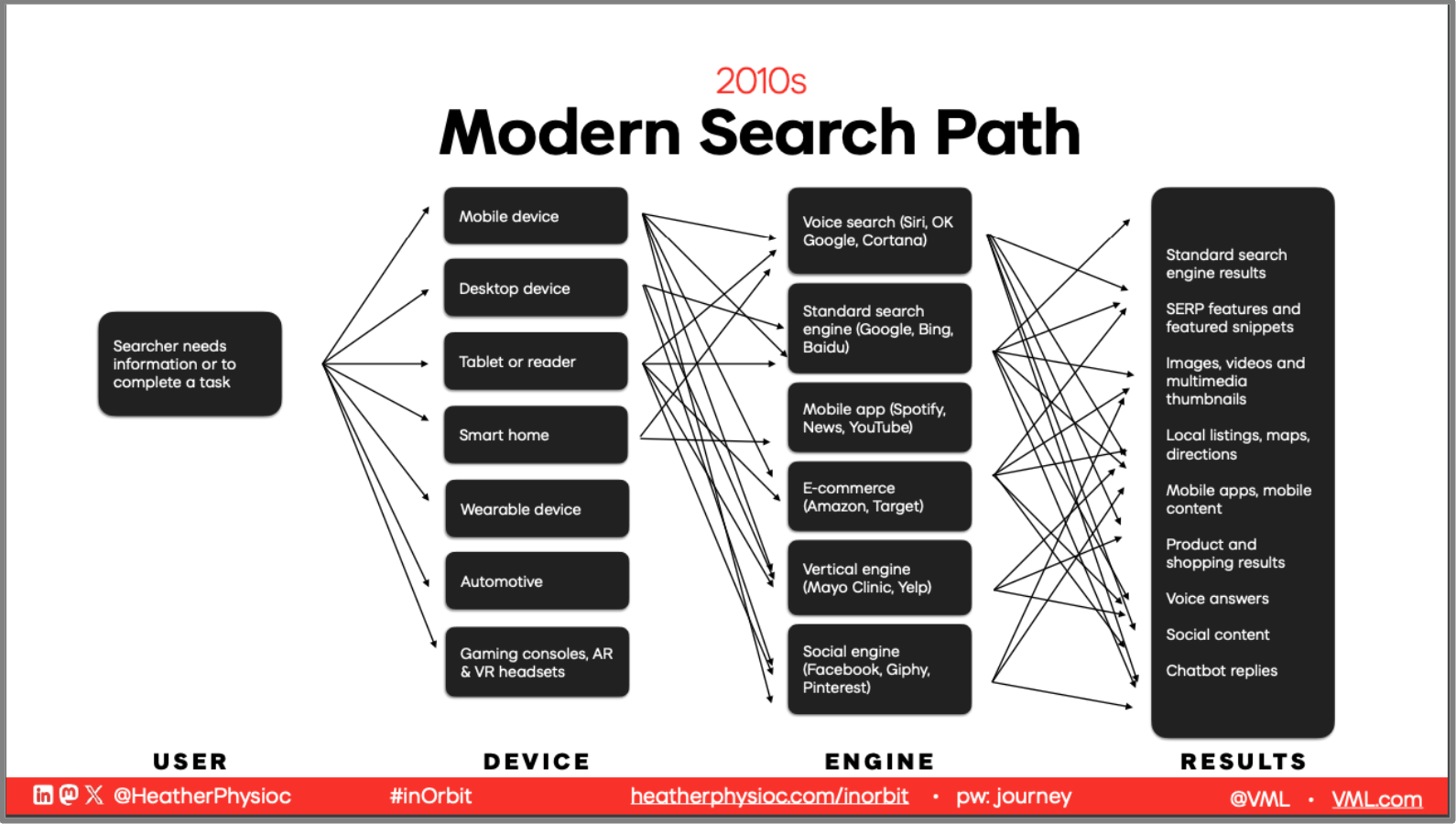

Another aspect that’s changing search behavior is that we now have access to various devices that we can perform searches on (e.g. our phone, laptop, tablet, Alexa, or even our car) and, as such, the contexts in which we’re searching vary too – we’re searching while at work, while driving, while cooking, while watching TV, etc.

Where might searches take place? - Screen Capture from a conference presentation by Heather Physioc, 2024

In other words, our search journeys are longer and more widely distributed in terms of device, context, and search platform than ever before.

2010s Modern Search path - Screen Capture from a conference presentation by Heather Physioc, 2024

What this means is that the need for your brand to be omni-present is greater than ever.

And since I know how difficult and costly content transformation can become (and because I’m such a big advocate for machine learning implementation in organic search operations), I will share with you an overview of different methods of automating content transformation with the help of machine learning web tools and APIs.

Hopefully, by the end of this article, you will not only have the tools, but also the understanding of how to get started improving your content accessibility and cross-platform organic visibility, regardless of your currently-dominant brand content format.

Imagine you have a piece of text, and you want to change it into a different form, while still keeping the core message - that’s the easiest way to explain what text-to-text transformation is all about. Text-to-text transformation algorithms rewrite text according to certain rules or instructions. To illustrate further, text-to-text transformation models can be applied in:

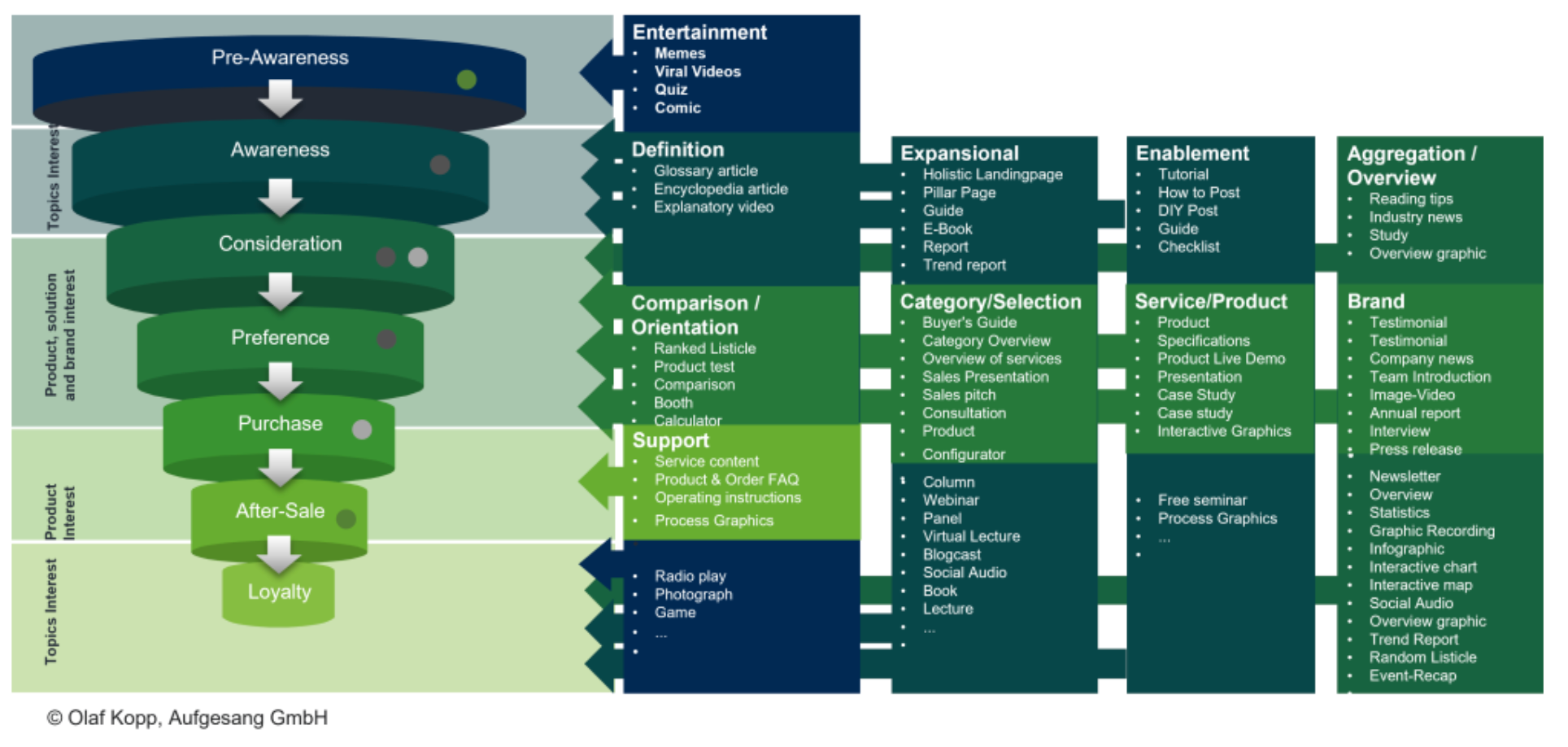

Text-to-text transformation can be incredibly powerful for speeding up the distribution of text-based content to other platforms. Referring to the graph by Olaf Kopp below, the goal we should have as marketers is to ensure that we can convey the brand message in as many content formats as possible, capturing multiple micro-moments. This makes our content work harder.

Classification of content types by micro-intents in the customer journey - Graph by Olaf Kopp, Aufgesang GmbH

Here are a few practical use cases for this type of transformation:

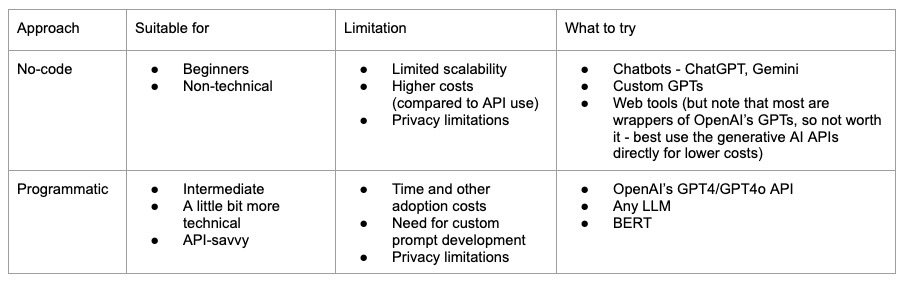

Here’s a rundown of tools and technologies you can use, and the suitability and limitations of each.

I highly recommend checking out Caitlin Hathaway’s Content Repurposer GPT for a quick-start with a no-code tool for content repurposing. Utilizing any LLM API would be a great place to start for programmatically scaling text-to-text transformation.
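As a rough illustration of what programmatic text-to-text transformation could look like, here is a minimal sketch that sends one source text to an LLM once per target format. The format names, the instructions in `FORMAT_INSTRUCTIONS`, the default model name, and the helper names are all assumptions made for this example. The `client` is assumed to expose a chat-completions style method like the one in OpenAI's Python SDK.

```python
# Hypothetical target formats and instructions; adjust to your own channels.
FORMAT_INSTRUCTIONS = {
    "linkedin_post": "Rewrite as a LinkedIn post of at most 120 words.",
    "email_teaser": "Rewrite as a two-sentence newsletter teaser.",
    "faq": "Rewrite as three question-and-answer pairs.",
}


def build_messages(source_text, target_format):
    """Build a chat prompt that asks for one target format."""
    instruction = FORMAT_INSTRUCTIONS[target_format]
    return [
        {
            "role": "system",
            "content": "You repurpose content. Keep the core message and "
                       "do not add facts that are not in the source.",
        },
        {"role": "user", "content": f"{instruction}\n\nSource:\n{source_text}"},
    ]


def repurpose(client, source_text, target_formats, model="gpt-4o-mini"):
    """Return {format: rewritten text} using a chat-completions style client.

    The model name is an assumption; pass whichever model you have access to.
    """
    results = {}
    for target_format in target_formats:
        response = client.chat.completions.create(
            model=model,
            messages=build_messages(source_text, target_format),
        )
        results[target_format] = response.choices[0].message.content
    return results
```

With the official `openai` package, `client` would typically be an `OpenAI()` instance, which reads the API key from the environment. Keeping the prompt builder separate from the API call makes it easier to review the instructions before anything is sent, and to swap in a different provider later.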

Text-to-speech technology (or TTS, for short) uses ML models to convert written text into spoken words. These models are trained to detect and replicate intricate patterns and nuances of human speech, allowing them to generate natural-sounding voices that can read out any given text.

Needless to say, this opens up a ton of opportunities for reaching audiences on new platforms, like:

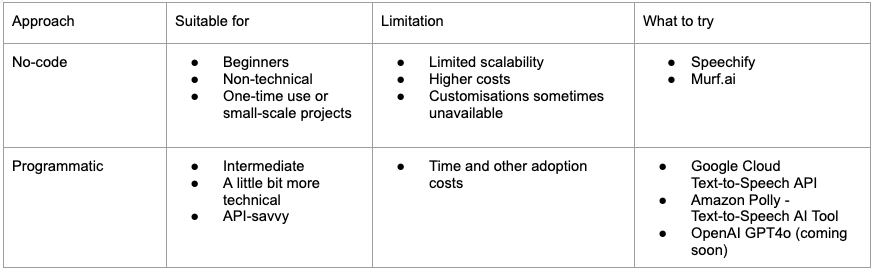

There are many TTS tools and platforms available, ranging from free to premium options, many of which offer not only different voices, but also voices with different dialects and personalities. Here are some of the main ones, alongside their ideal users and limitations.

Using a model like Google Cloud’s Text-to-Speech API can be as simple as selecting a language and voice from their library, customizing features like dialect and other voice characteristics, and bulk-processing text files into audio.
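One practical detail when bulk-processing long articles is that TTS APIs usually cap the amount of text per request (Google Cloud documents a limit on the order of 5,000 bytes, which is worth confirming against the current quotas). The sketch below splits text at sentence boundaries and writes one audio file per chunk. The function names, the 4,500-byte default, and the `synthesize` callable (any function that turns a string into audio bytes) are assumptions for illustration, not part of any library.

```python
import re
from pathlib import Path


def split_for_tts(text, max_bytes=4500):
    """Split text into chunks of at most max_bytes (UTF-8) at sentence ends."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if len(candidate.encode("utf-8")) <= max_bytes:
            current = candidate
        else:
            if current:
                chunks.append(current)
            # Note: a single sentence longer than max_bytes is kept whole.
            current = sentence
    if current:
        chunks.append(current)
    return chunks


def text_to_audio_files(text, synthesize, out_dir, stem="article", max_bytes=4500):
    """Write one audio file per chunk; `synthesize` maps str -> audio bytes."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for index, chunk in enumerate(split_for_tts(text, max_bytes), start=1):
        path = out_dir / f"{stem}-{index:03d}.mp3"
        path.write_bytes(synthesize(chunk))
        paths.append(path)
    return paths
```

With the `google-cloud-texttospeech` package, `synthesize` could wrap `TextToSpeechClient.synthesize_speech` and return the response's `audio_content`. Writing numbered part files avoids having to stitch audio together in code; the parts can be joined afterwards in any audio editor.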

Notably, there are also some web tools like Synthesia, the main function of which is creating a digital avatar that you can then use to create videos from a text input. I’d categorize tools like this as no-code, but they do require a video of you speaking to create a life-like digital avatar.

Speech-to-text (STT) algorithms convert spoken language into written text. They work by analyzing sound waves to identify individual sounds and words, which are then matched against patterns the model has learned in order to transcribe speech into text accurately.

When it comes to how this technology can be applied in marketing, here are a few ideas:

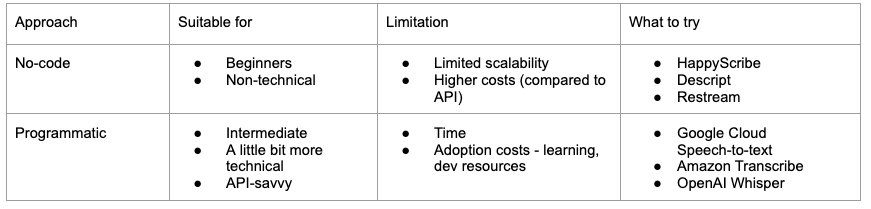

You can use a number of tools and technologies for STT transformation, each with specific limitations and use cases.

I highly recommend checking out the programmatic approaches, as some, like OpenAI’s speech-to-text functionalities, can be incorporated into processes easily, even by complete beginners with no coding experience.
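To give a sense of how little code a programmatic approach can need, here is a minimal sketch that transcribes every audio file in a folder. It assumes a `client` that exposes `audio.transcriptions.create`, as OpenAI's Python SDK does. The function name, the list of file suffixes, and the default model name are assumptions for this example.

```python
from pathlib import Path

# Assumed subset of audio formats; check your provider's supported list.
AUDIO_SUFFIXES = {".mp3", ".m4a", ".wav"}


def transcribe_folder(client, folder, model="whisper-1"):
    """Transcribe every audio file in `folder`; returns {filename: transcript}."""
    transcripts = {}
    for path in sorted(Path(folder).iterdir()):
        if path.suffix.lower() not in AUDIO_SUFFIXES:
            continue  # skip anything that is not an audio file
        with path.open("rb") as audio_file:
            result = client.audio.transcriptions.create(
                model=model, file=audio_file
            )
        transcripts[path.name] = result.text
    return transcripts
```

With the official `openai` package, `client` would typically be an `OpenAI()` instance. The resulting dictionary can then be saved as text files or passed on to a text-to-text step, for example to turn a podcast episode into a blog draft.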

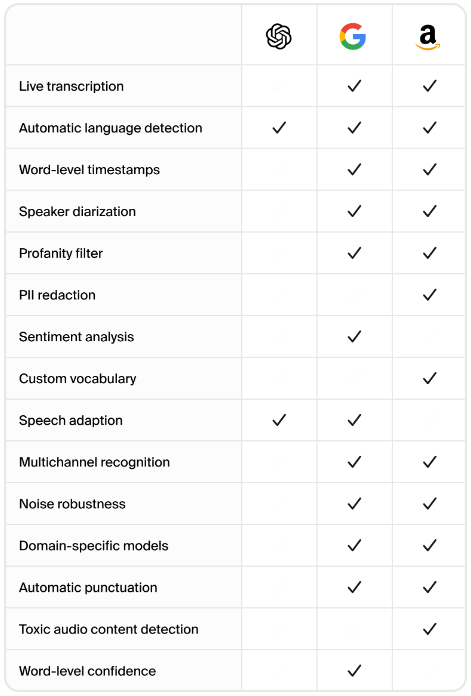

When it comes to the programmatic approaches mentioned, Gladia’s blog post includes some pretty interesting insights. In short, OpenAI, Cloud Speech-to-Text, and Amazon Transcribe all have automated punctuation features and automatically detect language. Cloud Speech-to-Text and Amazon Transcribe also have other advanced features that give them an edge over OpenAI.

Comparison of different speech-to-text algorithms by OpenAI, Google Cloud, and AWS on over 15 model capabilities - by Gladia.io

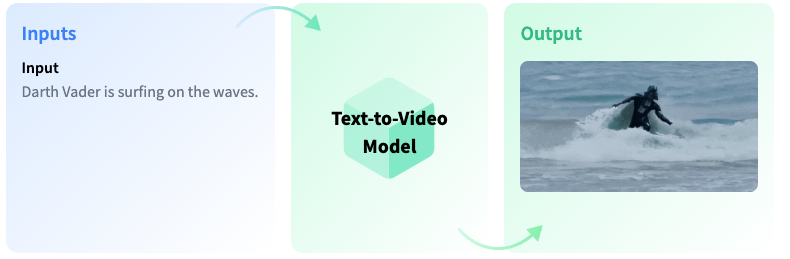

Text-to-video transformation takes words and transforms them into video. This is by far the most advanced and complex type of content transformation and, from a technological standpoint, we’re not truly there yet. The aim of these models is to create full-blown videos from a prompt or a script, including scenes and character design, music, and other stylistic elements.

Text-to-Video model concept, demonstrating the conversion from input (text) to output (video)

Creating text-to-video models is challenging because there isn’t enough paired text-video data to train them on. Most of the available data consists of text-image pairs, which is why text-to-image models are improving so quickly in comparison. Training a text-to-video model from scratch is not only costly, but also time-consuming and computationally intensive. Meta has addressed this problem by using existing text-to-image models as the foundation of its Make-A-Video model, which was then trained on motion separately using unlabeled video datasets.

When it comes to generative AI text-to-video models, when you provide the text, NLP techniques are applied to break it down into key elements (like objects, actions, emotions), and then these are mapped to visual elements that match those descriptions, with motion applied.

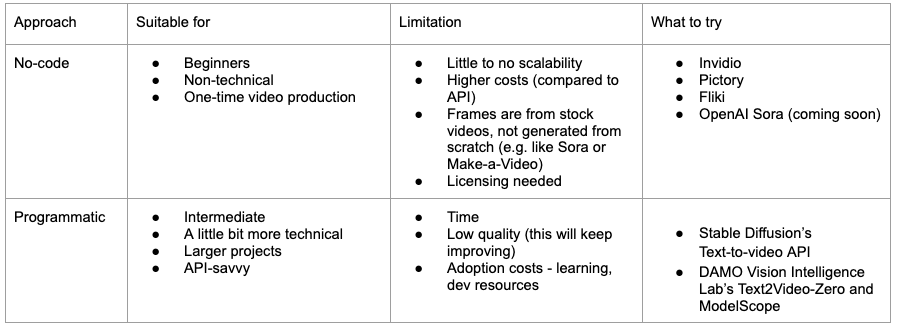

Examples of these types of models are OpenAI’s Sora and Meta’s Make-A-Video, but neither has been officially released to the public. There is also another type of text-to-video software available, which takes the text you type in and maps it to stock videos and images, with some slight image-based motion applied. Examples include web-based programs like InVideo.
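The second type of software, which maps text to stock footage, can be illustrated with a toy sketch. Commercial tools presumably use far richer matching than this; plain keyword overlap is used here only as a stand-in to show the idea. The clip names, tags, and function name are all hypothetical.

```python
import re

# Hypothetical stock library: clip name -> descriptive tags.
STOCK_CLIPS = {
    "city_timelapse.mp4": {"city", "traffic", "night"},
    "team_meeting.mp4": {"team", "meeting", "office"},
    "coffee_pour.mp4": {"coffee", "morning", "cup"},
}


def match_clips(script, clips=STOCK_CLIPS):
    """Pair each sentence with the clip sharing the most tag words (or None)."""
    storyboard = []
    for sentence in re.split(r"(?<=[.!?])\s+", script.strip()):
        words = set(re.findall(r"[a-z]+", sentence.lower()))
        # Pick the clip whose tags overlap most with the sentence's words.
        best = max(clips, key=lambda name: len(clips[name] & words))
        overlap = len(clips[best] & words)
        storyboard.append((sentence, best if overlap else None))
    return storyboard
```

Each sentence of the script becomes one storyboard entry, and sentences with no matching tags come back with `None` so a person can pick the footage manually.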

Text-to-video technology can be revolutionary to so many marketing functions:

Let’s go over some of the main contenders in terms of tools in this category.

I highly recommend this blog post by Hugging Face for a further deep-dive and demos of existing models. My personal opinion on text-to-video software and APIs is that they would be able to supplement a person-to-camera set-up, but not replace the need for it entirely, especially with brand content targeting informational and commercial intent.

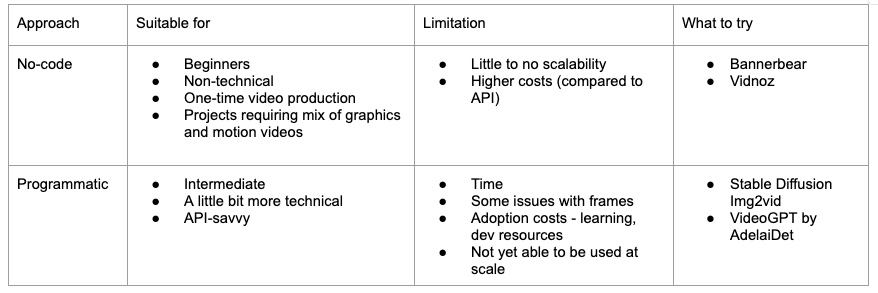

In contrast to text-to-video, image-to-video is a bit easier to execute. The idea is to envision the motion in the provided image, which is only the second step in text-to-video. The way the machine learning models work here is by understanding spatial and temporal patterns – they not only understand image content but also predict the logical motion that might follow based on the image content identified.

In short, API and web image-to-video tools can analyze the content of an image, identify key objects and patterns, and then apply various techniques to create a dynamic video sequence.

The underlying technologies are generative adversarial networks (GANs) and other deep neural networks, which translate still images into videos by predicting probable inter-frame transitions.

Here are some applications of this technology in marketing:

Let me introduce you to the tools you can use to get started with image-to-video transformation.

Before I conclude this article, I want to offer some advice on making the best use of these transformation technologies:

Due to the great ROI and productivity promises that ML-enabled automation has in many areas of business, there is huge pressure on marketers to embrace automation. However, I believe strongly that we should do so in a way that’s responsible.

When incorporating automated content transformation programs, always keep the following in mind:

Know where to draw the line before things become… freaky, robotic, or inauthentic!